1: Performance

Categories: Programming system

Tags: System

What is Performance?

For a simple program, performance means speed (how fast the program runs). But what does speed mean for a long-running program such as a server? Here we introduce two terms: latency and throughput.

- Latency (response time): How fast does the server respond to my request?

- Throughput: The number of requests served per unit of time.

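```c
// Example code: a minimal server that handles one request at a time.
// struct request, next_request() and respond() are assumed to exist;
// next_request() is assumed to return NULL when there are no more requests.
void dummy_server(void)
{
    struct request *request;

    while ((request = next_request()) != NULL)
    {
        respond(request); // the next request waits until this one is done
    }
}
```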
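The loop above can be turned into a small runnable program that actually measures both quantities. This is a sketch under stated assumptions: the `struct request` type, its `work_ms` field, `serve_sequentially()`, and a `respond()` that simply sleeps to stand in for real work are all hypothetical, and the requests come from an array instead of `next_request()`. Timing uses the POSIX `clock_gettime()` (with `CLOCK_MONOTONIC`) and `nanosleep()` calls.

```c
#define _POSIX_C_SOURCE 199309L /* for clock_gettime() and nanosleep() */
#include <errno.h>
#include <stddef.h>
#include <time.h>

/* Hypothetical request type: we only model how long respond() takes. */
struct request {
    long work_ms;
};

/* Current time from a monotonic clock, in seconds. */
static double now_seconds(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Hypothetical stand-in for real work: sleep for req->work_ms. */
static void respond(const struct request *req)
{
    struct timespec left = { .tv_sec = req->work_ms / 1000,
                             .tv_nsec = (req->work_ms % 1000) * 1000000L };
    /* If a signal interrupts nanosleep(), it stores the remaining time
     * in `left`, so we simply sleep again for what is left. */
    while (nanosleep(&left, &left) == -1 && errno == EINTR)
        ;
}

/* Serve n requests one at a time (like dummy_server), store each
 * request's latency in latency[i] (seconds), and return the measured
 * throughput in requests per second. */
double serve_sequentially(const struct request *reqs, size_t n,
                          double *latency)
{
    double begin = now_seconds();
    for (size_t i = 0; i < n; i++) {
        double start = now_seconds();
        respond(&reqs[i]);
        latency[i] = now_seconds() - start;
    }
    return (double)n / (now_seconds() - begin);
}
```

For example, three requests with `work_ms` of 10, 20 and 30 should each report a latency slightly above their sleep time, and the returned throughput should come out close to, but not above, three divided by the sum of the three measured latencies (roughly 50 requests per second).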

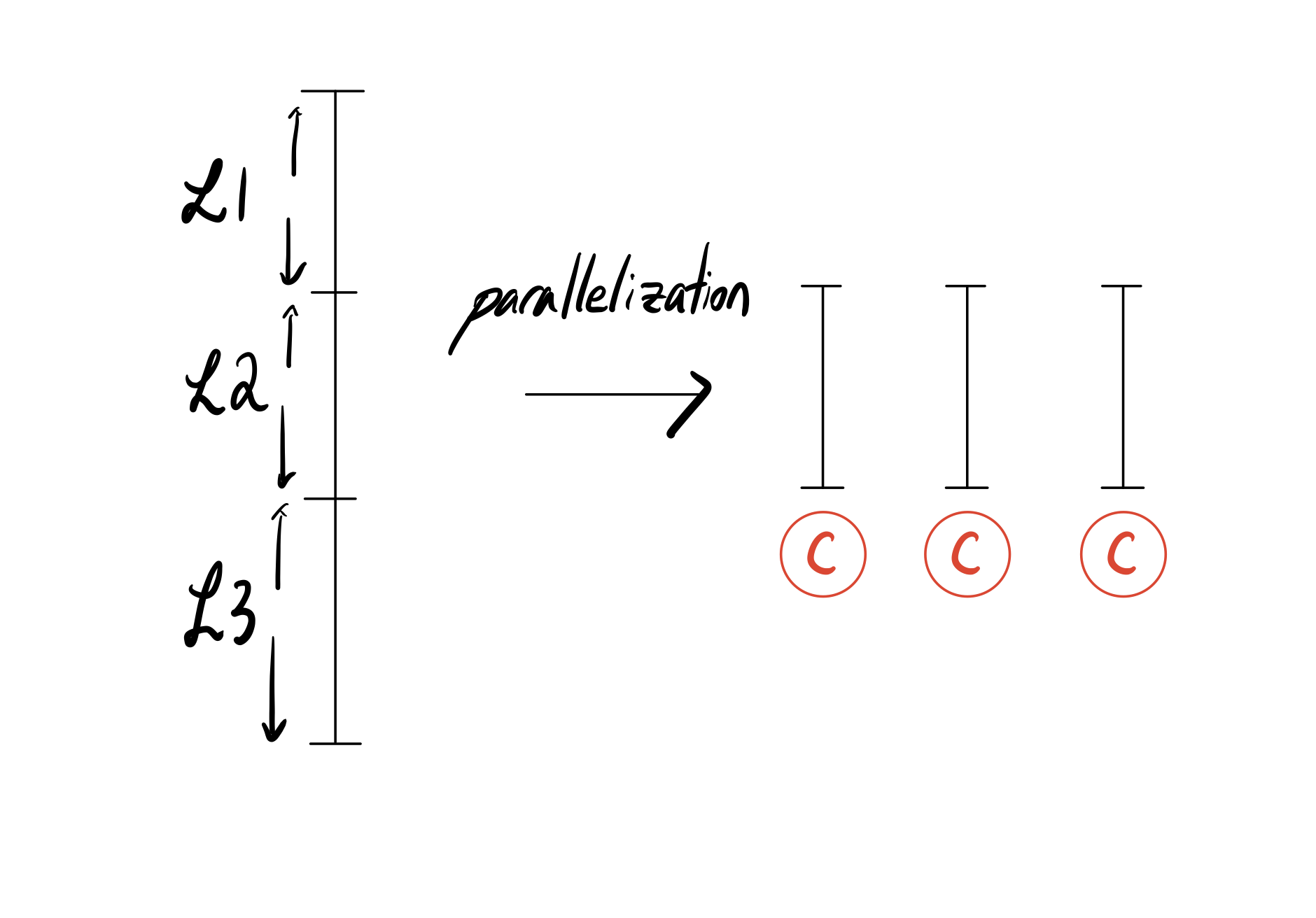

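The server handles the three requests one after another: request 1 takes latency \(L_1\), then request 2 takes \(L_2\), then request 3 takes \(L_3\). Because the requests are served back to back, serving all three takes \(L_1 + L_2 + L_3\), so

Throughput = \(\frac{3}{L_1 + L_2 + L_3}\)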
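The same reasoning extends to any number of requests. For a server that handles \(n\) requests strictly one at a time and is never idle between them, throughput is the reciprocal of the average latency \(\bar{L}\). The latency values in the worked example are made up for illustration:

\[
\text{Throughput} = \frac{n}{\sum_{i=1}^{n} L_i} = \frac{1}{\bar{L}}, \qquad \bar{L} = \frac{1}{n}\sum_{i=1}^{n} L_i
\]

\[
L_1 = 10\,\text{ms},\; L_2 = 20\,\text{ms},\; L_3 = 30\,\text{ms} \;\Rightarrow\; \text{Throughput} = \frac{3}{0.060\,\text{s}} = 50\ \text{requests/s} = \frac{1}{20\,\text{ms}}
\]

So for a purely sequential server, any change that lowers the average latency raises throughput by the same factor.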

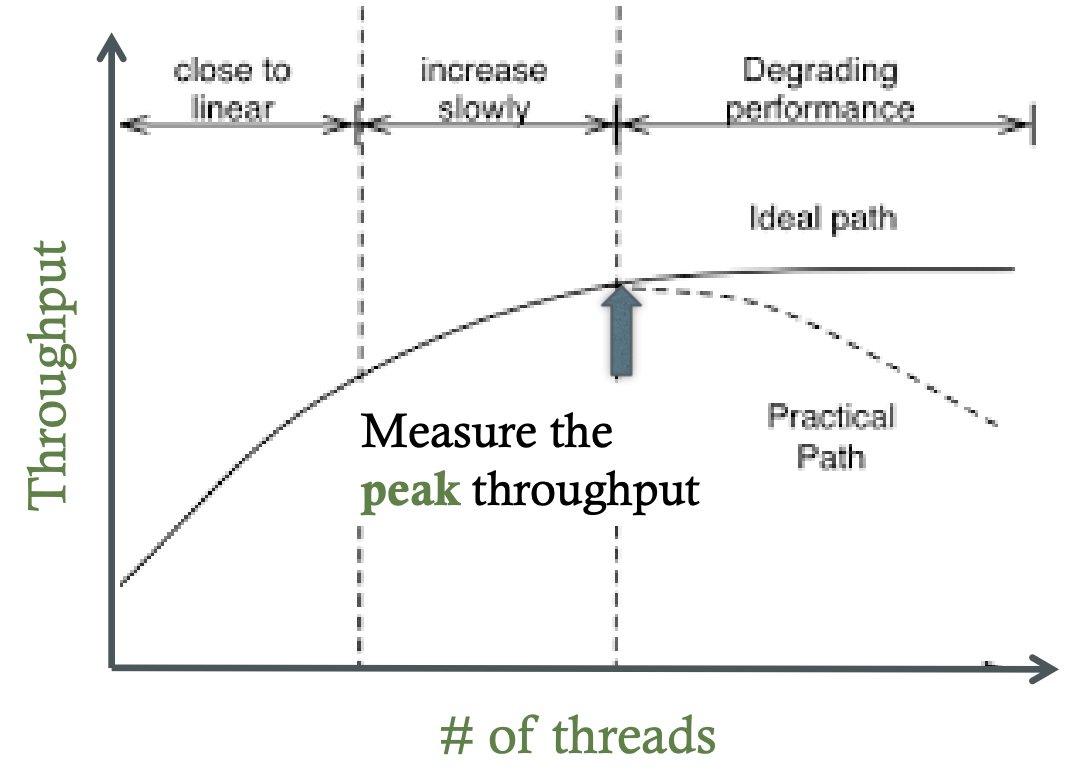

As we parallelize the code, we usually get better throughput but worse latency. By acquiring a faster CPU, we could improve both latency and throughput. Parallelization generally improves throughput; however, as the graph on the right suggests, beyond a certain degree of parallelism, adding more parallelism reduces throughput. Synchronizing and sharing data across threads and processes are expensive operations, and many other factors can also reduce performance. We will explore these in more detail in the following posts.
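The rise-and-fall shape described above is commonly modeled with Neil Gunther's Universal Scalability Law (USL). It is a swapped-in model, not necessarily what produced the graph, but it captures both costs named here: a contention term \(\sigma\) for work that must be serialized (for example behind a lock) and a coherency term \(\kappa\) for keeping shared data in sync between workers. With \(N\) workers and single-worker throughput \(\lambda\), \(X(N) = \frac{\lambda N}{1 + \sigma(N-1) + \kappa N(N-1)}\), which peaks near \(N^* = \sqrt{(1-\sigma)/\kappa}\). The sketch also applies Little's law, assuming a closed system that always has \(N\) requests in flight, to get latency \(R(N) = N / X(N)\). The function names and parameter values are illustrative.

```c
/* Universal Scalability Law, used here as a toy model (an assumption,
 * not a measurement).
 * n      : number of workers serving requests concurrently
 * lambda : throughput of a single worker, requests/second
 * sigma  : contention, the fraction of work that is serialized
 * kappa  : coherency cost of keeping shared data in sync */
double usl_throughput(double n, double lambda, double sigma, double kappa)
{
    return lambda * n / (1.0 + sigma * (n - 1.0) + kappa * n * (n - 1.0));
}

/* Little's law for a closed system with n requests always in flight:
 * latency (seconds) = n / throughput. */
double usl_latency(double n, double lambda, double sigma, double kappa)
{
    return n / usl_throughput(n, lambda, sigma, kappa);
}

/* Worker count in 1..max_n with the highest modeled throughput. */
int usl_best_workers(int max_n, double lambda, double sigma, double kappa)
{
    int best = 1;
    for (int n = 2; n <= max_n; n++) {
        if (usl_throughput(n, lambda, sigma, kappa) >
            usl_throughput(best, lambda, sigma, kappa))
            best = n;
    }
    return best;
}
```

With the made-up values \(\lambda = 100\) requests/s, \(\sigma = 0.05\) and \(\kappa = 0.001\), `usl_best_workers(64, 100.0, 0.05, 0.001)` returns 31 (about 904 requests/s, close to \(N^* \approx 30.8\)). At 64 workers the modeled throughput drops to about 782 requests/s, while the modeled latency climbs from 10 ms with one worker to about 34 ms with 31.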
